Two weeks ago, the AI Infrastructure Summit took place in Santa Clara, California, to work out what comes next for our AI build-out.

It’s a big deal because the big players in AI infrastructure were all there, from Meta to OpenAI, along with a number of smaller, important, up-and-coming players. Those are names you should know about, because I expect they’ll be hitting the IPO roadshows over the next year.

“AI is kicking our butts,” admitted the Vice-President of engineering at Meta.

Then Richard Ho, head of hardware at OpenAI, was talking about Meta’s giant Prometheus datacentre, and he summed up the whole event:

“I don’t think that’s enough.

“It doesn’t appear clear to us that there is an end to the scaling model. It just appears to keep going. We’re trying to ring the bell and say, ‘It’s time to build.’”

Even OpenAI, with Microsoft’s billions and one of the world’s largest GPU footprints, is telling us they can’t keep up.

This is why paying attention to these conferences and summits is important. They bring together the people who are literally building out AI infrastructure to figure out just how in the heck they can keep up with, let alone get ahead of, the pace of AI development.

AI isn’t just advancing faster than anyone expected. It’s advancing faster than the very infrastructure meant to power it.

That creates (still) one of the single biggest chances you’ll see in history to build meaningful, long-term wealth.

But you’ve heard of Meta, OpenAI, Nvidia. You don’t need me to paint the picture for you on the bullish case for them.

Which are the next wave of AI infrastructure companies you need to know about? The ones that might not be listed right now, but you can expect to see hitting the IPO markets and the stock markets in the next year.

That’s what I’m looking at today: the next big stars of the AI market, and the names you need to pin to your investing mood boards to keep a close eye on.

The new wave of AI stars

We’ve all heard about Nvidia’s AI chips and the scramble for more.

But the Summit shone a rare light on the lesser-known players working to fix AI’s hidden bottlenecks, the ones that don’t make headlines but will decide who can actually scale.

They tap areas like memory, storage, processing and design, every crucial aspect of how AI infrastructure is built and functions in our world today… and tomorrow.

Kove

Kove is a private Palo Alto startup. Their software-defined memory pools RAM across entire server farms. In practice, that means a GPU with limited on-board memory can suddenly tap terabytes (even petabytes) sitting on other machines.

CEO John Overton put it bluntly: “GPUs are scaling, CPUs are scaling… memory has not. As long as we think about memory as stuck in the box, we’ll remain stuck in the box”.

Kove’s pitch is to give every GPU “virtually unlimited memory.” Imagine what that means when models double in size again next year. Kove:SDM is their flagship product, and it has already been shown to deliver 5x better performance than existing DRAM.

Memory is a big, big deal, and we’re seeing that play out in the market now as Micron, SK Hynix and Samsung all shift up a gear. Should Kove IPO (as I expect), I think they’ll be on a fast-track path higher in value too.

Pliops

Pliops is an Israeli hardware firm founded in 2017 with the aim of improving the way data is processed and how storage is utilised.

Its new LightningAI appliance effectively adds a long-term memory tier beneath GPU high-bandwidth memory.

Instead of recomputing or reloading from scratch, large language models and AI models can fetch cached data straight from fast storage drives.

In simple terms, it means more users per GPU, lower latency, and efficiency gains of up to 8x on data access and processing.

In a world where GPU time is almost as important as the GPU itself, it’s a hugely promising development on how data moves.

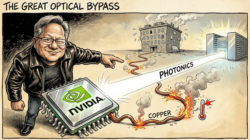

Lightmatter

Then there’s Lightmatter out of Boston. They’re betting on photonics as being the next generation of “superchips”. These chips move data with light instead of electrons.

Their “Passage M1000 3D Photonic Superchip” connects thousands of accelerators with optical lanes that dwarf what copper can handle.

More bandwidth, less power. And they’re building an entire platform around the Passage chips, somewhat akin to the CUDA platform Nvidia built around their GPUs.

With a $4.4 billion valuation already, Lightmatter is no small fry in high-tech chip development. But it’s positioning itself as a new approach to chip design and manufacturing that could be the game-changing tech that helps AI infrastructure builders catch up to the AI that’s “kicking their butts”.

Cadence

Also, you should probably get to know Cadence Design Systems if you don’t already.

But I must make a confession here…

Cadence isn’t new, they’re not small, and they’re by no means part of the next wave. They’re very much part of the current wave.

You can find their stock, Cadence Design Systems (NASDAQ:CDNS), on the NASDAQ with a chunky $95 billion valuation. But don’t let that fool you: they’re as important to the future of AI as any smaller upstart.

At the Summit they were showing off bleeding-edge HBM3E memory controllers and talking a lot about their “digital twin” ecosystems.

Cadence is one of those invisible giants you only appreciate once someone opens the door. Behind every Nvidia GPU or Apple silicon chip is Cadence software.

They provide the tools that make the design of cutting-edge AI processors possible.

Today, Cadence is pushing further into “digital twin” ecosystems, where entire chip architectures can be modelled, tested, and refined virtually before a single wafer is produced. Their advancements promise not just faster chip design cycles, but smarter, more powerful, and more efficient AI chips for the future.

A never-ending cycle of spending, profits and upgrades

AI infrastructure isn’t a one-and-done investment cycle…

It’s perpetual.

Every hyperscaler, from Amazon to Meta to OpenAI to Oracle, will be writing billion-dollar checks year after year just to stay close to the bleeding edge. That makes the companies selling the gear they pack into these AI warehouses, whether it’s GPUs, photonics, memory systems, or software to make it all run smoothly, the most consistent winners of the boom.

Also, while there’s clearly big money to be made on the legacy names, pay close attention to the private names too.

Kove, Pliops, Lightmatter.

Just three to start with.

They aren’t household names, yet.

But they’re developing tech that makes the next wave of AI buildout so exciting. And they’re names that giants like Nvidia can’t ignore.

That’s also why, when you look through their investor lists, you see Nvidia popping up regularly.

That makes them prime candidates for IPOs or acquisitions. Hopefully the former so you can get a share of the action.

My take is they’ll IPO before acquisition.

If 2025 has been the comeback year for IPOs, expect the remainder of the year and 2026 to be the year of the Super IPO and one that will go down in history as one of the great bull markets of all time.

Until next time,

Sam Volkering

Contributing Editor, Investor’s Daily

P.S. We’ve spent a lot of time discussing the UK’s recession risk, fiscal mismanagement, and how government policies may crush certain parts of the economy. But not everything is doom and gloom. Some sectors are quietly booming — none more so than AI. What’s happening in Silicon Valley could soon rewrite the playbook for wealth in Britain. That’s why I’m urging UK investors to tune into what James Altucher has to say in this briefing — he’s found a window of opportunity that could help you stay on the right side of the next big wealth shift. Watch here before the Budget hits.