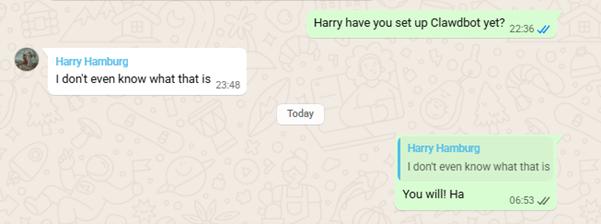

I’m going to start today’s essay with a screenshot. It’s a small snippet of a chat between me and a former colleague of mine, Harry Hamburg.

Harry’s my go-to guy for testing and trying out new AI things.

He’s also very experienced with the AI platform Claude. If you don’t know Claude, it’s the AI from Anthropic (Amazon is a heavy investor), and it’s similar to ChatGPT (from OpenAI), Grok (from xAI) and Gemini (from Google).

Claude, ChatGPT, Grok and Gemini make up the current “Big Four” AI models.

But step into the spotlight, Clawdbot.

The fact Harry didn’t even know about it means I’m really early on this, and now so are you.

I guess I should explain what the heck Clawdbot is and why you should give two hoots.

A step closer to AGI

Over the last week, Clawdbot kept coming up in my various feeds. Once I looked into it, it became obvious why.

Clawdbot is a new open-source AI that was recently released into the wild.

This is not another chatbot, despite the name.

At its core, Clawdbot is a self-hosted AI assistant that runs on your own hardware: a Mac mini, a PC, a Raspberry Pi, or a low-cost cloud server.

That idea of self-hosting is important, so hold onto that thought.

Instead of living in a browser tab, Clawdbot lives inside the tools you already use every day: WhatsApp, Telegram, Slack, iMessage, GitHub, Teams and more, with new integrations coming on board at great speed.

The crazy thing here is that it remembers what you tell it. It builds context over time. It does not reset after each conversation.

People are using it to monitor flights, trigger alerts, organise files, draft emails, fill out forms, manage calendars, run scripts, and interact with other software on their machine.

Crucially, it does not just wait for instructions. It is a proactive AI assistant.

It’s only now reaching wider awareness, but it was created late last year by Peter Steinberger, a well-known macOS developer, and released as an open-source project.

Having looked at it, it’s a little technical to set up, but actually not too bad if you’re patient. I would suggest having a look at the GitHub readme file to get an idea here.

The barrier to entry is almost absurdly low.

If you’ve got a solid PC or Mac mini, or can pay for a cloud server plan, you then only need a Claude or ChatGPT subscription.

Suddenly you have an always-on AI agent that knows your workflows, your preferences, and your context for as little as a couple of hundred quid a year.

Much cheaper than a human assistant!

This is why the AGI conversation has flared again. Not because Clawdbot is some superintelligence (it is not), but because it is a step closer: it thinks and is proactive in how it helps you manage your life.

It is AI stepping out of the chat box and into real life. But there are also very real and tangible investment implications in all this.

The hardware reality most people still miss

Every Clawdbot instance runs somewhere.

Locally, or on a cloud server. It consumes compute. It consumes memory. It consumes storage. And it does so continuously.

Now zoom out from one developer running Clawdbot on a Mac mini and consider what happens when this model spreads.

Not just Clawdbot, but thousands of similar personal AI agents. If Clawdbot is the first useful proactive AI, imagine how many will come after it…

You are no longer talking about a handful of hyperscale data centres handling bursty workloads. You are talking about millions of always-on inference workloads spread across homes, offices, and edge servers.

At exactly the same moment, the hardware market is going bonkers.

Reports out of South Korea indicate that Samsung has raised NAND flash memory prices by more than 100%, with further increases already being discussed. DRAM prices continue to surge and server memory pricing has jumped sharply.

This is absolute supply stress.

Let me be clear: there is no AI bubble.

Yes, the mega-caps will benefit as these kinds of AI assistants scale up and spread.

Companies like Micron, Samsung, SK Hynix, and Nvidia are already clear winners and will continue to be, in my view.

A trillion-dollar Micron no longer sounds fanciful if memory becomes as critical as energy.

But that is not where the most asymmetric opportunities usually emerge.

Every mega-cap started life as a small or micro-cap company.

The returns that change portfolios, and sometimes lives, rarely come from what’s already obvious. A 100% gain on Micron, for example, is welcome, but it does not move the needle in the same way.

The outsized returns tend to come from the overlooked segments.

Think specialist memory suppliers, controller designers, photonics companies, power management companies, cooling specialists and edge networking providers, many of which are tiny companies most investors have never heard of.

This is why Clawdbot is an important development to know about. It matters not just for your own potential use and benefit, but also because it demonstrates that AI is moving from novelty to utility.

Clawdbot is not a full-blown AGI yet. But it is a glimpse of where AI is heading: toward persistence, autonomy, foresight, proactivity and integration into everyday life.

The real question isn’t whether AI demand slows. It’s how much faster it accelerates.

You just need to figure out whether you have enough capital allocated overall, and enough risk capital in the smaller plays that have the chance to deliver the most outsized gains.

Until next time,

Sam Volkering

Contributing Editor, Investor’s Daily

P.S. If you missed James Altucher’s recent investor briefing, you can still watch the full session now.

In it, James walks through how he’s thinking about today’s markets — what’s quietly breaking beneath the surface, where capital is being forced to move, and how he’s positioning before the crowd catches on.

The live event may be over, but the insights are still very much in play.

You can watch the full briefing on demand while access remains open.